Computer

- History divided the timeline of mankind in two: BC (Before Christ) and AD (Anno Domini, popularly misread as “After Death”). But considering the impact the invention of the computer has had on mankind, one would not be wrong to read BC and AD as Before Computer (B.C.) and After Computer (A.C.).

- It is hard to find someone belonging to the present generation who would disagree with this division of time, based on history before and after the computer.

- Emails, online chats, and internet calls have changed the world, in fact made it smaller. In the times after computer, almost everything has gone online.

- Shopping, internet medicine, gaming, social networking, travelling, hotel and ticket bookings, designing – interior or exterior, wedding planning, daily news, movies, music, operating domestic appliances, printing, insurance, community updates; appointment with a dentist, car washing company or a dog-walker; nannies for kids, admission to schools and universities, oh… the list is endless.

- Before the computer reached the common man at the dawn of the 21st century, life was comparatively slower. People lived at a time when everyday activities could take all the time one had: going to the market to stock up on weekly supplies, dropping letters in the post box on the way back home, queuing up outside ticket counters, paying bills and so on.

- But then, like a superhero, the computer saved us from all the tiring and time-consuming ways of getting things done and let us skip those steps and get things done at the click of a mouse.

- In fact, we have reached a point where a day without computer at work or home is unimaginable.

- The story of the invention of the computer is not a 60-year-old one; it dates back to the time when a computer was a person. An invention doesn’t come out of nothing. A series of minor developments build up and contribute in a major way to turn an invention into a phenomenon.

- Considering the way computer has shaped this generation, it is more than an invention; it’s a phenomenal feat of mankind. Apparently, the phenomenon started out as a counting machine. One thing led to another and when mankind moved from counting to computing, it came up with ideas to shift the role of a computer from a person to a machine. Yes, it’s true, in the beginning the computer was a person.

- In the mid-seventeenth century, the word computer meant ‘someone who computes’, because back then scientists, mathematicians and astronomers employed people to carry out large calculations. It was a burdensome task. Hence, in order to save time and energy, a computer (as in a person) was employed.

- But these computers weren’t one hundred percent reliable. They left room for errors, and errors in large calculations had costly ramifications. Thus, it was necessary to devise a more dependable way of getting the work done.

- The starting point of most inventions is man looking out for an alternative: rather than going through the effort of getting a task done by himself, he would have a person, a mechanism or a tool do the same. Some of the alternatives that he managed to find are simply amazing.

- Beginning with wheels; he discovered that wheels could not only help him travel faster, but also cover places his feet couldn’t. With tools and weapons, he discovered he could do things his bare hands couldn’t.

- Much later, with the engine as its heart, he built machines that could take care of his chores. Finally, to relieve his brains from the burden of memorising, calculating and computing, he built a Computer.

- A computer is “a programmable electronic device that can store, retrieve, and process data”, according to Webster’s Dictionary (1980).

- The Techencyclopedia (2003) defines computer as “a general purpose machine that processes data according to a set of instructions that are stored internally either temporarily or permanently.”

These definitions could be a lot to chew on for some. So, in simple words, a computer is a machine that works on your command. It stores your information and retrieves it when you want. It’s a machine that can get your work done faster.

Three dots on the timeline of history can be looked upon as the three stages to the making of a Computer.

Every invention in its own way is a milestone, but with the computer, it was like building another sun; a sun whose power inspired the launch of a satellite, automatic cars, machines, robots and internet.

Today’s computer is nowhere close to what its early predecessors were. The journey of computers from human to machine is best understood in three stages.

- Stage One: Early Computing Devices (3100 B.C. to 15th century)

- Stage Two: Base of Computer Technology - the Binary System (15th – 19th century)

- Stage Three: Four Generations of Computer (1930 – 1984)

One look at these stages and an obvious understanding floats up that major developments have taken place within a small timeframe.

Ever since man felt the need to count livestock and members of his family or tribe, he would do it on his fingers and toes. As the early man led a nomadic life, counting made him feel secure. The feeling that you haven’t lost the things you owned yesterday, developed a sense of security in him.

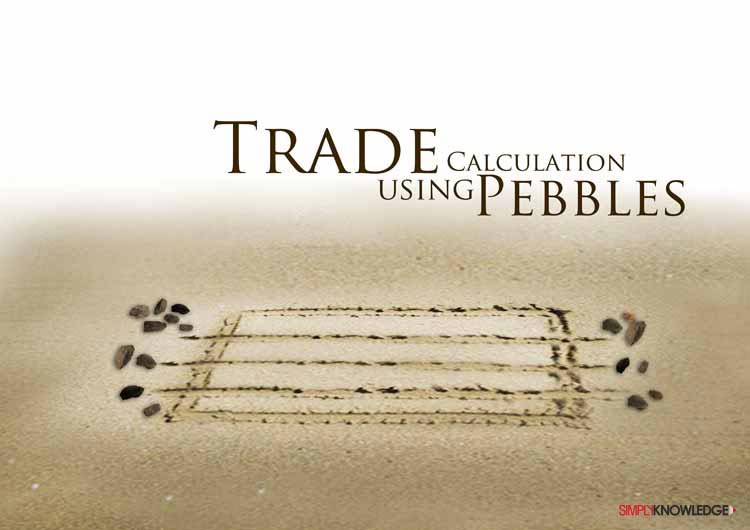

But when he found counting on fingers was not enough to meet his need, he began to use pebbles and sticks.

- Pebbles not only helped him keep inventory of his livestock, but also helped him trade with others.

- With a finger or a stick, he would draw lines on the earth, say a rectangular box with four or five horizontal lines in it. Each line would represent a different kind of stock he intended to trade. At the beginning of each line he would place a number of stones equal to the number of animals he had brought to trade.

- For example, if he had four cows, he would place four pebbles in front of the first line from the bottom, ten stones for ten goats on the second line, and five pebbles for five hens on the third.

- Once he had agreed to sell, say, two cows, three goats and two hens, he would move that many stones from the left side of the box to the right.

- This pebble arrangement later inspired the building of the calculating tool ‘Abacus’, but long before that man began writing on soft clay with a blunt reed, using cuneiform script (a system of writing).

Tablets and Counting Board

- At this point, technology entered human lives; man began converting natural resources into simple tools.

- The interesting part is that there never was an idea of building a computer; instead, there was a need for a device that would help one calculate faster and with precision. Thus, in the pursuit of such a device, man’s earliest attempts took shape in the form of tablets and tables.

- As early as the Babylonian civilization, tablets such as the cuneiform Plimpton 322 were made to provide quick and easy ways of calculating numbers; the Salamis Tablet, a later Greek counting board, served the same purpose.

- The Plimpton 322, dating back to approximately 1,700 B.C., was a tablet listing what are now called Pythagorean triples, i.e. integers a, b, c satisfying a² + b² = c². The tablet could be used to solve quadratic equations.

- Tablets were used for reference, as they held tables of values satisfying a² + b² = c². Quadratic equations in 1700 B.C.? Well, these equations were used to find the curve that objects take when flying through the air.

- After tablets came a counting device called “counting board,” which apparently is the precursor to Abacus. The Babylonians used these boards to assist with counting and simple calculations.

- The earliest known abacus, the Sumerian abacus, dates to 2700-2300 B.C. The familiar form of the abacus, a bamboo frame with beads sliding on wires, was used to perform arithmetic functions like addition and subtraction.

- Some of the prominent civilizations across the globe have their own version of the abacus.
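The relation a² + b² = c² that the Plimpton 322 tabulates can be checked mechanically. The following is a minimal sketch in Python, not a reconstruction of how the Babylonians worked; the function names `is_pythagorean_triple` and `triples_up_to` are made up for this illustration.

```python
def is_pythagorean_triple(a: int, b: int, c: int) -> bool:
    """Return True when the positive integers a, b, c satisfy a^2 + b^2 = c^2."""
    return a > 0 and b > 0 and c > 0 and a * a + b * b == c * c


def triples_up_to(limit: int) -> list[tuple[int, int, int]]:
    """Brute-force every triple (a, b, c) with a <= b < c <= limit."""
    found = []
    for a in range(1, limit + 1):
        for b in range(a, limit + 1):
            for c in range(b + 1, limit + 1):
                if is_pythagorean_triple(a, b, c):
                    found.append((a, b, c))
    return found


if __name__ == "__main__":
    print(triples_up_to(13))
    print(is_pythagorean_triple(119, 120, 169))
```

A table like this only has to be computed once; after that, anyone can look up a ready-made answer, which is exactly the job a reference tablet did.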

The Next Computing Device

- Even as primitive man was busy adapting to nature, it was the sky that always intrigued him. He began to observe and study the phenomena of lightning, rain, thunder and storm. This study led him to calculations with large numbers.

- Early civilizations such as the Babylonians, Egyptians, Greeks, Romans, Chinese, Indians and Maya performed methodical observations of the night sky.

- But when it came to analyzing and computing the data collected from observations of the moon and the night sky, the need for a computing device began to be felt.

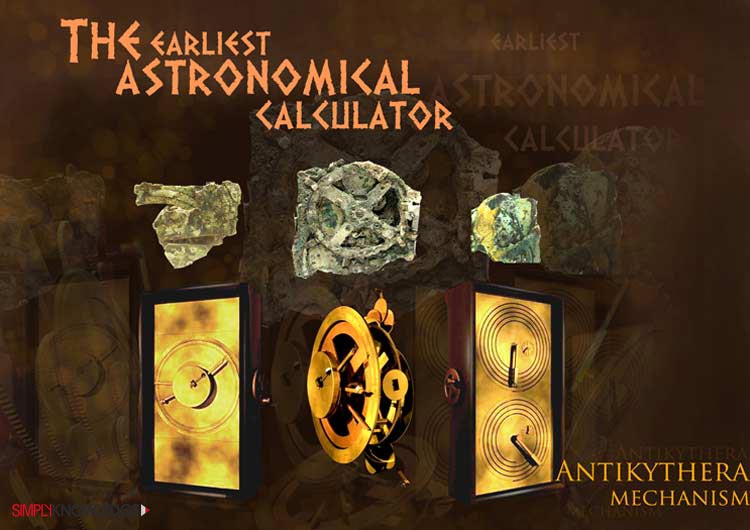

- However, going by the records, for a long time no major calculating device was invented or built, until the Antikythera Mechanism.

- The Antikythera Mechanism is the earliest known astronomical calculator, believed to have been built around 85 B.C. The interesting part about this calculator is that it was discovered only at the turn of the twentieth century.

- In October 1900 A.D., a group of Greek sponge divers discovered a shipwreck off Point Glyphadia on the Greek island of Antikythera. The mechanism got its name from the place it was found.

- The researchers gradually came to realize that the instrument is a mechanical calculator, or an analog computer. It was designed to calculate the positions of planets and other astronomical cycles.

For another 1500 years, mankind continued to live by the clock with no major developments in the domain of calculating tools.

- At the start of the 15th century a new leaf was turned over. It was the beginning of the age of exploration.

- These explorations were backed by calculations and observations of natural elements like the position of the moon and the stars, tide, wave current, and other geological elements.

- The astronomical calculations were getting larger and larger. An efficient way of getting them done could reduce the consequences of human error.

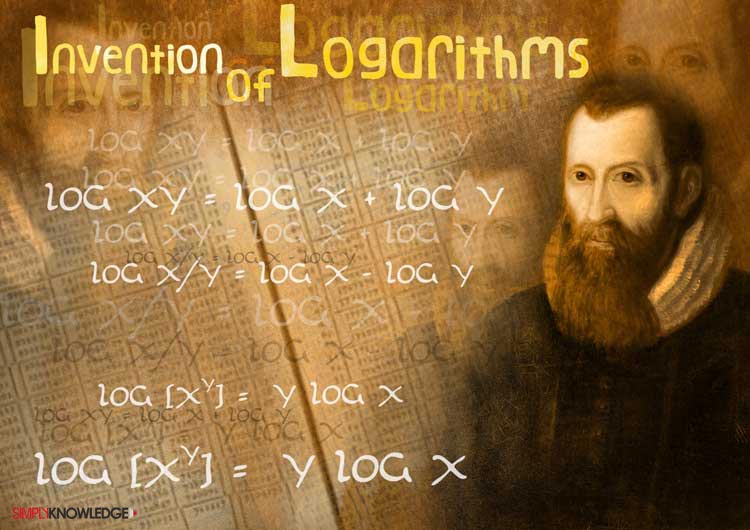

- And then in 1614, the Scottish astronomer and mathematician John Napier invented logarithms, a method that used tables of numbers (log tables) to undertake large calculations accurately.

- Now, by referring to the log tables, one could carry out huge calculations faster.

- Soon the navigators, scientists, engineers, and other professionals adopted these tables to perform computation more easily.

- But then, as these tables were written by humans and used by humans, the room for error still persisted.

- Mathematical computation became more interesting during the 16th and 17th centuries, with “Rulers” coming to the rescue. Rulers in this context meant the Sector and the Slide Rule.

- These scale rulers facilitated various mathematical calculations. They were used to solve problems in proportion, trigonometry, multiplication and division, and also for various functions such as square and cube roots.

- Besides, they also proved to be a mechanical aid in gunnery, surveying and navigation. Some of the popular mathematical computation aids of that time were wooden devices: Schickard’s, in 1623, and Poleni’s, in 1709.

- With the Renaissance as the backdrop, Europe was undergoing a change. The 17th century witnessed the works of some heavyweight scientists.

- Galileo, René Descartes and Isaac Newton were the highlights of the century. Among these names, the name of Blaise Pascal also needs a special mention.

- After all, it was Pascal who introduced us to the first mechanical calculator. So, finally, here was a device that could do the four arithmetic operations without relying on human intelligence.

- What really happened was this: Blaise Pascal, a French mathematician, physicist and religious philosopher, wanted to ease his father’s job of tiring calculations and recalculations of taxes owed and paid.

- His attempt resulted in the successful construction of a mechanical calculator in 1642.

- It was called ‘Pascal’s Calculator’ or the ‘Pascaline’. Although it was one of the first of its kind, it did not achieve commercial success.

- Gradually, with time, variations were brought about to this mechanical calculator, and soon the mechanical calculator became a fashion.

Here Comes the Ones and Zeros

- The binary system has existed since somewhere between the fifth and the second century B.C. However, it did not find any major application.

- Historically, the first known description of a binary numeral system was made by an Indian scholar, Pingala. He used binary numbers to represent two forms of syllables, short and long.

- Later, in the 11th century, the Chinese scholar and philosopher Shao Yong represented the fundamental elements of Taoism in binary numbers, the yin as 0 and the yang as 1.

Mathematical Implications of the Binary System

- Mathematically, the binary concept remained untouched. Centuries passed, and finally in 1703 the binary system was explained mathematically in the article Explication de l'Arithmétique Binaire, written by the German mathematician and philosopher Gottfried Wilhelm von Leibniz.

- In binary arithmetic, all the math is done using only two digits, 0 and 1.

- Binary arithmetic gained popularity because it was easy to use; after all, one was dealing with only ones and zeros. The math was different: it followed its own set of rules for carrying out mathematical operations.
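To make the “different set of rules” concrete, here is a small Python sketch of writing a number in binary and of adding two binary numbers column by column with a carry. It is an illustration only; the helper names `to_binary` and `add_binary` are hypothetical and do not come from Leibniz’s article.

```python
def to_binary(n: int) -> str:
    """Write a non-negative integer using only the digits 0 and 1."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # remainder is the next digit, right to left
        n //= 2
    return "".join(reversed(digits))


def add_binary(x: str, y: str) -> str:
    """Add two binary strings column by column, carrying as in schoolbook addition."""
    result = []
    carry = 0
    i, j = len(x) - 1, len(y) - 1
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += int(x[i])
            i -= 1
        if j >= 0:
            total += int(y[j])
            j -= 1
        result.append(str(total % 2))  # digit written in this column
        carry = total // 2             # 1 + 1 = 10 in binary, so carry the 1
    return "".join(reversed(result)) or "0"


if __name__ == "__main__":
    print(to_binary(13))            # 1101
    print(add_binary("101", "11"))  # 5 + 3 = 8, i.e. 1000
```

The only rule that differs from decimal addition is when to carry: in binary, a column overflows as soon as it reaches two.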

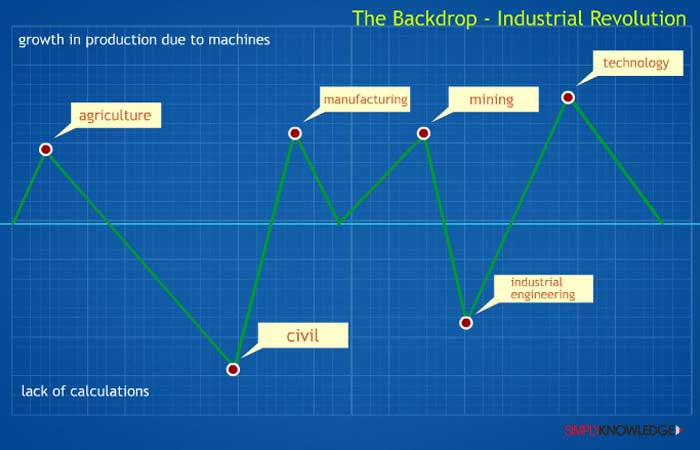

- By 1750, the backdrop was slowly changing; elements of the Industrial Revolution were beginning to surface. Many manual tasks were now being taken over by machines, and this change began touching domains such as agriculture, manufacturing, mining, transportation and technology.

- Though it began in Great Britain, the Industrial Revolution soon spread across Europe. The introduction of machines increased production and did well for the economy. But when it came to assistance in mental tasks, especially mathematical calculations, developments were still lagging.

- Developments in civil and industrial engineering were heavily dependent on precision of calculation. As production increased, the trade of surplus goods led to the navigation of foreign shores.

- But navigation required exact calculation of tides and water currents to assist transportation on water and manage the sea traffic.

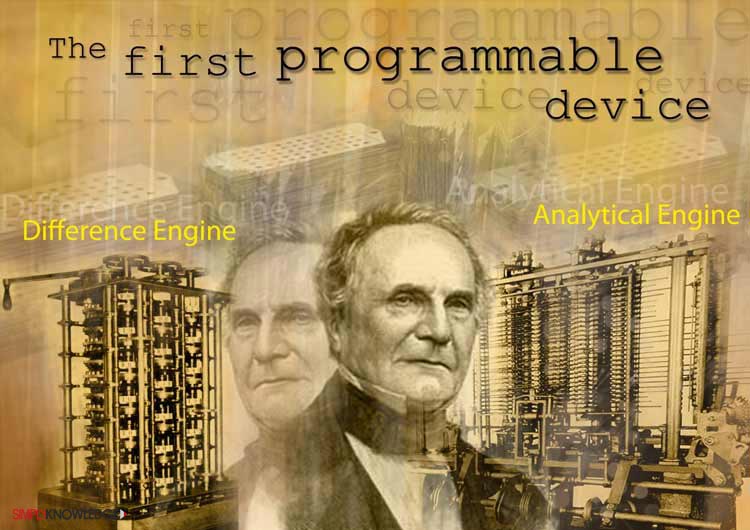

- In 1822, Charles Babbage, an English mathematician and mechanical engineer, began building an automatic mechanical calculator called the Difference Engine.

- The machine could compute astronomical and mathematical tables. It used the decimal system and was operated by cranking a handle.

- As computing such tables by hand was time consuming and expensive, the British government was hoping Babbage could come up with something more economical. The government gave Babbage £1700 to start on the project.

Machine that made a Difference – Analytical Engine

After the Difference Engine project was scrapped by the government authorities, Babbage began to work on the machine that went on to become the first mechanical general-purpose computer, the Analytical Engine, designed in 1837.

- The Analytical Engine was much like today’s computer: it had a CPU, called the ‘mill’, that would process all the information, and what is memory in today’s computer was called the ‘store’.

- The one feature of this machine that made it a revolutionary piece of technology is that it was the first programmable computing device.

- The interesting part is that Babbage used punch cards to program the machine. He had borrowed the idea of using punch cards from the Jacquard textile loom, which used them to weave intricate designs.

- Babbage studied the working of the punch cards used in a loom, and saw how a series of punch cards could not only simplify the process of manufacturing textiles with complex patterns, but also program the loom to obtain the desired design.

- The use of punch cards in the Analytical Engine was, in a way, similar to their use in the textile machine. The user needed to create the program, punch it onto the cards, insert them in the machine and let it run.

- It was this vision and endeavor that gave Babbage the title of father of the mechanical computer.

- With the programming of punch cards, the idea of software first came to be known.

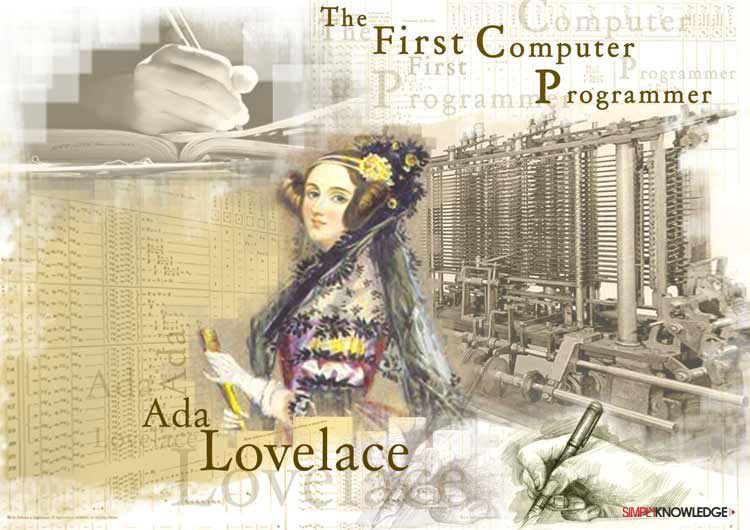

- On learning about the engine, Ada Lovelace, an English mathematician and writer and the daughter of the poet Lord Byron, took an interest in the machine.

- Babbage and Lovelace got to know each other at a social function, and from then on would discuss the Difference Engine and, later, the Analytical Engine.

- In regular correspondence with Babbage, she wrote notes on the engine, including what was later recognized as the first algorithm, in 1843. She is hence often considered to be the first computer programmer.

- It was Ada who explained the functioning of the Analytical Engine to other scientists.

- Despite the support from Ada, people failed to understand Babbage’s Analytical Engine. They could follow the functionality of the Difference Engine, but lost him while trying to understand the Analytical Engine, a futuristic device of the time.

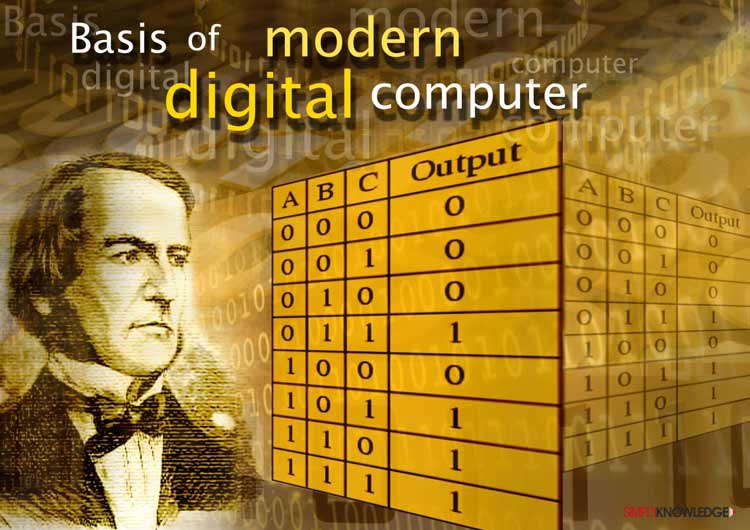

Boolean Algebra – the Base for Computer Science

- Logic! Theories of logic existed even before the time of Aristotle. Many cultures in history, including China, India, Greece and the Middle East, had their own theories of it.

- However, it was Aristotle who gave it a place in philosophy. Come 1847, logic was revisited by the British mathematicians Augustus De Morgan and George Boole.

- De Morgan presented systematic mathematical treatments of logic, while Boole took logic further by developing symbolic logic, the simplest kind of logic.

- Boole’s intensive and extensive investigations of logic resulted in Boolean algebra. This algebra was different from elementary algebra. If elementary algebra was the algebra of numbers, Boolean algebra was the algebra of truth values, that is, 0 and 1. This algebra, also known as Boolean logic, went on to become the basis of the modern digital computer.
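An algebra of the truth values 0 and 1 can be sketched in a few lines of Python. The operation names `AND`, `OR` and `NOT` below are the conventional modern ones, and the check of De Morgan’s laws is added purely as an illustration; neither is quoted from Boole’s or De Morgan’s own writing.

```python
def AND(x: int, y: int) -> int:
    """1 only when both inputs are 1."""
    return x & y


def OR(x: int, y: int) -> int:
    """1 when at least one input is 1."""
    return x | y


def NOT(x: int) -> int:
    """Swap 0 and 1."""
    return 1 - x


def truth_table(op):
    """List (x, y, op(x, y)) for every combination of truth values."""
    return [(x, y, op(x, y)) for x in (0, 1) for y in (0, 1)]


def de_morgan_holds() -> bool:
    """Check NOT(x AND y) == NOT x OR NOT y, and the dual law, for all inputs."""
    return all(
        NOT(AND(x, y)) == OR(NOT(x), NOT(y))
        and NOT(OR(x, y)) == AND(NOT(x), NOT(y))
        for x in (0, 1)
        for y in (0, 1)
    )


if __name__ == "__main__":
    print(truth_table(AND))
    print(truth_table(OR))
    print(de_morgan_holds())
```

Because there are only two values, every law of this algebra can be verified by simply trying all the combinations, which is what `de_morgan_holds` does.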

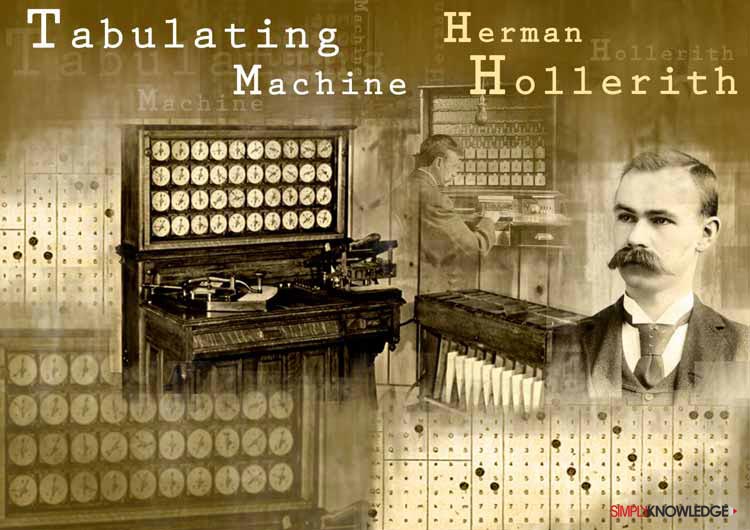

- Herman Hollerith was only nineteen when he worked on the 1880 US census. He learned that the process was not only laborious and time-consuming, but also error-prone.

- While working on mechanizing the process, he tried paper tape (perforated paper tape, a form of data storage), but soon switched to punch cards.

- The equipment he designed, the Tabulating Machine, had a tabulator (a machine that arranges data in table form) and a sorter (a unit that arranged the punched cards in sorted order), so that the data would be tabulated as well as sorted.

- It was used by the US census office to rapidly tabulate statistics from millions of pieces of data for the 1890 census.

- Hollerith’s tabulating machine turned out to be a success: the tabulation of the 1890 census was completed in a year’s time, whereas the 1880 census took almost eight years.

- In time, Hollerith’s firm merged with others to form the Computing-Tabulating-Recording Company, which was renamed IBM, the International Business Machines Corporation, in 1924.

All said and done, the early twentieth century computer was still a human, using simple components. He still used ten digits and a few signs to compute huge mathematical tasks, with the help of the mechanical computers available at that time.

The Boolean Connection

- Back in the 1930s, telephones worked on switching circuits. A switch is a device that channels incoming data from multiple input ports to a specific output port. By doing this, it forwards the data to its intended destination.

- Ideally, a switch can be in one of two states, either ON or OFF. While studying switching circuits for his master’s degree, Claude Shannon, a student at MIT (Massachusetts Institute of Technology), saw that the Boolean concept of the truth values 0 and 1 could be used to design circuits.

- This observation of Shannon’s led to the marriage of the Boolean system with the circuit. In those days, circuits were used in telephones. Later, when computer scientists used such circuits in their computers, it marked the beginning of the invention of the computer.

- In the world of computers, it can be said that when Boolean met Circuit, the Computer was born. However, this happened slowly, over a period of time.
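One way to picture the meeting of Boolean and Circuit is to treat switches wired in series as AND and switches wired in parallel as OR. The Python sketch below uses that reading to add two one-bit numbers; the function names `series`, `parallel` and `half_adder` are hypothetical, and the wiring is an illustrative example rather than a circuit taken from Shannon’s thesis.

```python
def series(*closed: bool) -> bool:
    """Current passes switches in series only if every switch is closed (AND)."""
    return all(closed)


def parallel(*closed: bool) -> bool:
    """Current passes switches in parallel if any one switch is closed (OR)."""
    return any(closed)


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit values using only series and parallel switch paths.

    Returns (sum_bit, carry_bit).
    """
    # The sum bit is ON when exactly one input is ON: two parallel paths,
    # each a series of one switch and the opposite contact of the other.
    sum_bit = parallel(series(a, not b), series(not a, b))
    # The carry bit is ON only when both inputs are ON.
    carry_bit = series(a, b)
    return sum_bit, carry_bit


if __name__ == "__main__":
    for a in (False, True):
        for b in (False, True):
            print(int(a), int(b), [int(bit) for bit in half_adder(a, b)])
```

Nothing in `half_adder` knows about numbers; it only opens and closes paths, yet the result is binary addition of two digits.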

The Sultan of software, Bill Gates, is often quoted as saying, “I will always choose a lazy person to do a difficult job, because he will find an easy way to do it.”

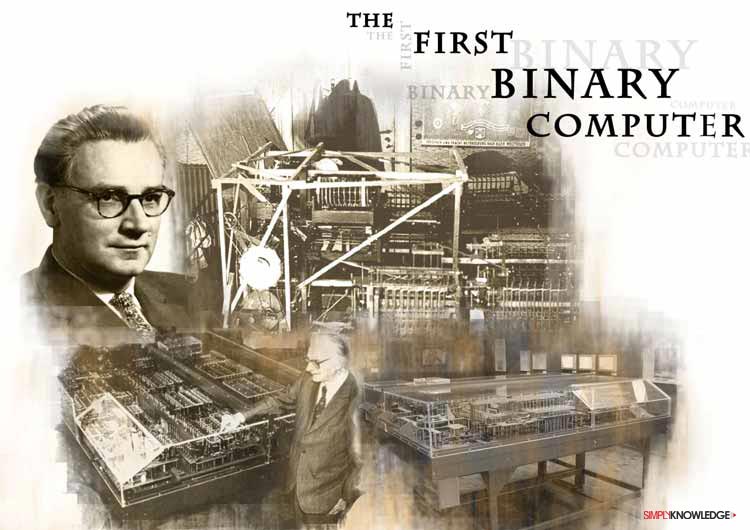

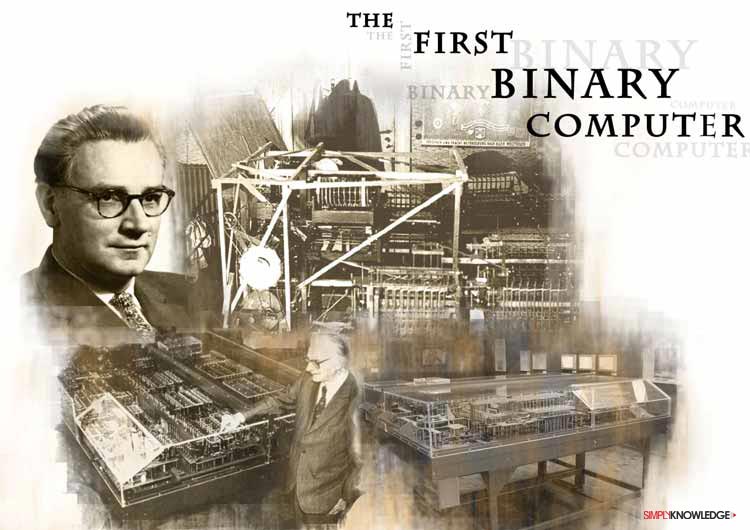

Chronologically, Gates’ quote came much later. In the early 1930s, there was one such lazy person who could fit Gates’ bill: a German civil engineering student, Konrad Zuse. He detested calculating, and hence worked on a way to skip the cumbersome process.

- While doing a large calculation using a slide rule or a mechanical adding device, the tiresome part was keeping track of the intermediate results obtained after applying mathematical operations (addition, subtraction, multiplication and division) between two numbers.

- Zuse detested the laborious part of calculation; he wanted a device or mechanism that would take care of keeping track. He was clear in his mind that his automatic calculator would have three components: a control unit, a memory unit and a processing unit, where the numbers punched in would be calculated.

- In 1936, Zuse began building the first binary computer, the Z1, a mechanical calculator that used groundbreaking technologies and had limited programmability.

- The Z1 was funded by Zuse’s parents, sister and well-wishers. It was built between 1936 and 1938, but sadly was destroyed in the Allied air raids on Berlin in December 1943.

Zuse’s Z series

- The Z1 was followed by the Z2 in 1939. The Z2 had the same mechanical memory, but its arithmetic and control logic were replaced with electrical relay circuits.

- Despite its promise, the relay machine was unreliable in operation, as the relays would fail easily. But this relay design was good enough to become the base for the series of computers (i.e. Z2, Z3 and Z4) that Zuse designed.

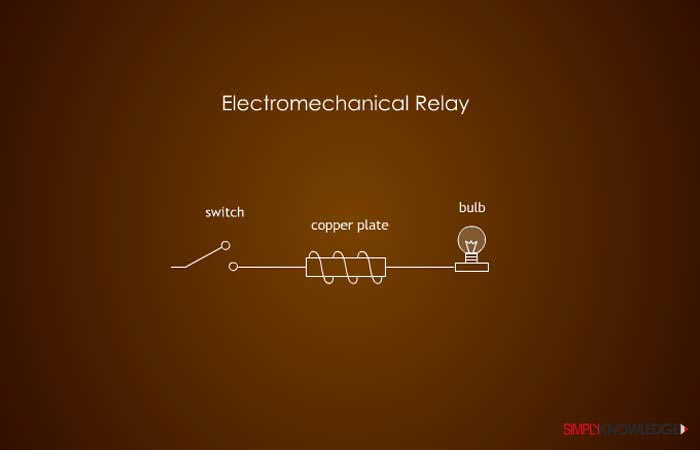

- An electromechanical relay is a circuit in which a wire is connected at one end to a switch, runs further, and is coiled onto a copper plate, which is connected to a bulb.

- In this relay, when the switch is turned ON, the circuit closes and the bulb lights up; in binary, this is represented by 1.

- But when the switch is turned OFF, the circuit opens and the bulb goes off; this is represented by 0.

- In plain words, 1 is ON and 0 is OFF. The internal framework of the computer consists of a number of relays that go on and off while the data is being processed. The Z2 had 600 relays, while the Z3 had 2000.

- With the use of relays, we made a transition from beads stacked up on the counting board and abacus to beads of light in a computer that light up in a relay while processing data.
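The idea that a row of ON/OFF relays can hold a number is easy to sketch in Python. The `Relay` class and the helpers `store_number` and `read_number` below are hypothetical names for this illustration and make no claim about how Zuse actually wired his machines.

```python
class Relay:
    """A switch that is either closed (ON, bit 1) or open (OFF, bit 0)."""

    def __init__(self) -> None:
        self.closed = False

    def switch_on(self) -> None:
        self.closed = True

    def switch_off(self) -> None:
        self.closed = False

    @property
    def bit(self) -> int:
        return 1 if self.closed else 0


def store_number(relays: list, value: int) -> None:
    """Set the relays so their ON/OFF pattern spells `value` in binary.

    The leftmost relay holds the most significant bit.
    """
    if value < 0 or value >= 2 ** len(relays):
        raise ValueError("value does not fit in this many relays")
    for position, relay in enumerate(reversed(relays)):
        if (value >> position) & 1:
            relay.switch_on()
        else:
            relay.switch_off()


def read_number(relays: list) -> int:
    """Read the ON/OFF pattern back as an ordinary integer."""
    value = 0
    for relay in relays:
        value = value * 2 + relay.bit
    return value


if __name__ == "__main__":
    bank = [Relay() for _ in range(4)]
    store_number(bank, 5)
    print([relay.bit for relay in bank])  # [0, 1, 0, 1]
    print(read_number(bank))              # 5
```

Each extra relay doubles the range of numbers the bank can hold, which gives a feel for why large calculations called for relays by the hundreds.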

In those days, the electromechanical relay was widely used in the telephone industry. Zuse learnt about this circuit and its application. He knew that for his computer to support large calculations it would require a large number of relays, and relays weren’t cheap in those days. With the help of his family and friends, Zuse continued his endeavors. He kept working on ways to make combinations of switches work; as a result, he ended up designing a mammoth device that occupied the entire living room of his parents’ flat on Wrangelstrasse.

- Given that the relays were highly unreliable, engineers and scientists had a tough time running such bulky machines.

- Vacuum tubes soon replaced the relays, as they could do the job more efficiently. They were more reliable and took up less space.

Vacuum Tubes in Computer

- Vacuum tubes were not new to engineers, as they were already used in radio equipment and television.

- Thus, while improving on the Z2, Zuse’s co-worker Helmut Schreyer suggested using vacuum tubes.

- The reason was that the switching on and off in the Z1 and Z2 was done by moving mechanical parts.

- With vacuum tubes, the switching is done by electrons within the glass envelope of the tube. This would make the switching around a thousand to two thousand times faster.

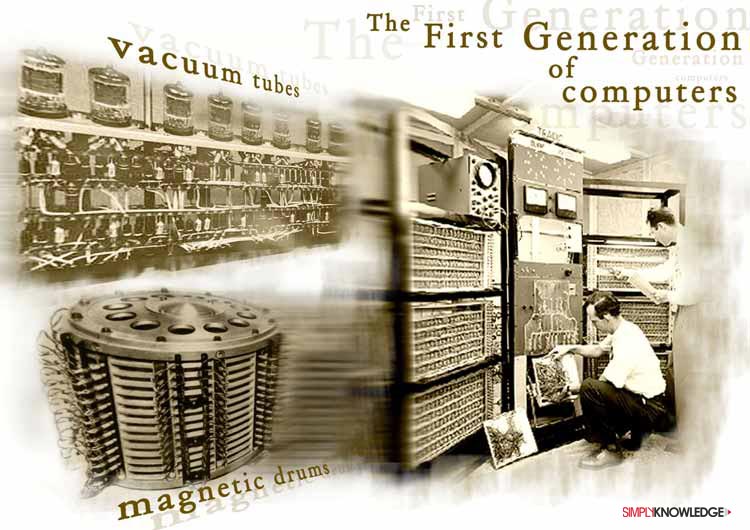

- The use of vacuum tubes in computers marked the beginning of the first generation of computers.

- Initially, Zuse thought it was a crazy idea and stayed with relays; in 1941, the Z3 went on to become the first fully operational electromechanical computer.

- With the World War as the backdrop, the development of the computer was undergoing a fundamental change. Computers that used vacuum tubes and magnetic drums came to be classified as First Generation Computers.

- Other machines, like the UK’s Colossus computers and the Atanasoff-Berry Computer, were among the first to use vacuum tubes and binary representation of numbers.

- The Z series computers were here to rock. If the arithmetic unit of Z3 was called a masterpiece, then that of Z4 was phenomenal. When Eduard L. Stiefel, a Swiss mathematician and a professor at Eidgenössische Technische Hochschule (ETH) Zurich inspected Z4, he declared the machine to be suitable for scientific calculations.

- On the European front, World War II made the air smoggy and dotted the skies with bombs and missiles flying across the spectrum.

- On 1st September 1939, Hitler invaded Poland marking the beginning of the Second World War. At the start of the war, the Nazis adopted a tactic that was virtually impossible to defend against.

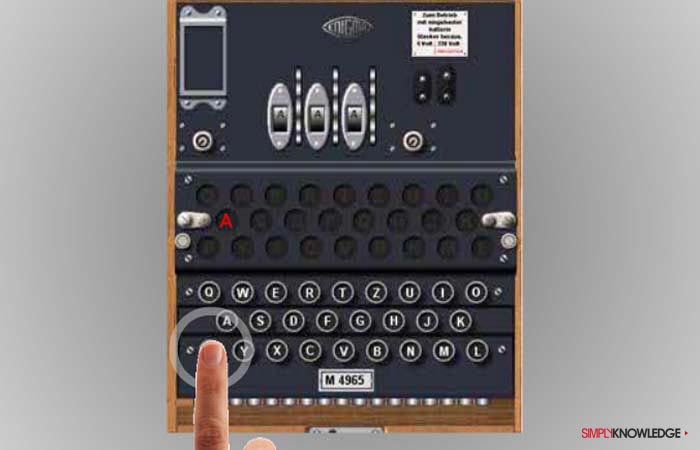

- The strategy was to guide German submarines to their targets via radio messages, which were coded and sent using the highly sophisticated Enigma machine.

- The Enigma machine had a keyboard, a lamp board and a plug board. You hit a key on the keyboard and a corresponding letter on the lamp board would light up. The machine encrypted the communication in a way that seemed impossible to crack.

- Even as Hitler was scoring with the Enigma machine, the British government deployed all its code-breakers to help read high-level German army messages.

- These code-breakers used the Colossus computer to help decipher (decode) teleprinter messages that had been encrypted using the electromechanical Lorenz cipher (coding) machine.

- Colossus was also the world’s first electronic, digital computer that was programmable. It was designed by engineer Tommy Flowers.

- Considering the use of this computer was highly secretive, Tommy Flowers and his team were deprived of recognition. Not only that, but their contribution could not even influence the development of later computers.

Weapons Demanded Precision

- Besides, the war also saw the use of newer weapons. One of the major tasks fell to the gunners, who had to aim at targets on the horizon. The gunners would use firing tables and, depending upon the range and target, fire the projectile accordingly.

- These firing tables were calculated on the basis of test firings. The US military back home spent hours and hours of research to calculate and compile a firing table.

- To calculate the path of a shell, a number of elements had to be taken into consideration. It took more than seven hundred multiplications, besides factors such as air pressure, wind force, direction and density.

- What might look like shooting in the air were, in reality, shells aimed to destroy targets. Thousands of man-hours and a number of test firings were dedicated to calculating the precision of these projectile-firing weapons. Thus a special computing group was set up at the University of Pennsylvania.

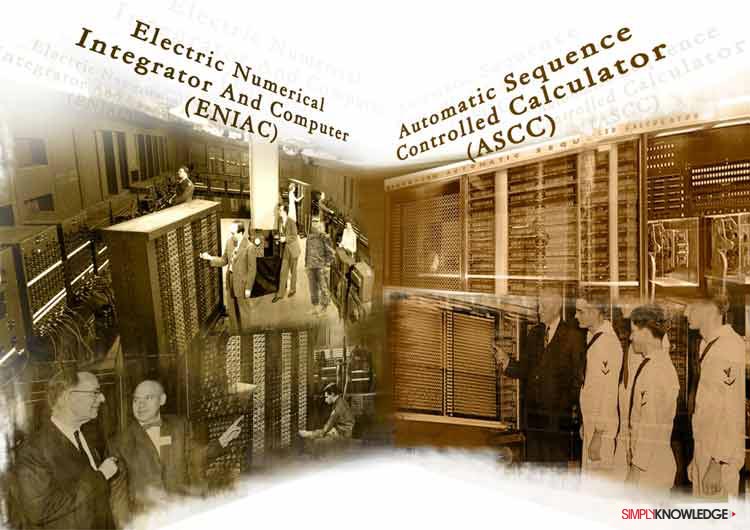

At the University of Pennsylvania

- The special computing group that was set up at the University of Pennsylvania worked on calculating artillery firing tables for the United States Army’s Ballistic Research Laboratory.

- The group designed the first electronic general-purpose computer – ENIAC (Electronic Numerical Integrator and Computer).

- The work on ENIAC was completed by early 1946 and the U.S. Army Ordnance Corps formally accepted the computer in July 1946.

- The United States Army financed the design and construction of ENIAC during World War II. The construction contract was signed in mid-1943, and work on the computer began in secret at the University of Pennsylvania’s Moore School of Electrical Engineering.

- The computer boasted speeds one thousand times faster than electro-mechanical machines; it was a leap in computing power unmatched by any machine previously built.

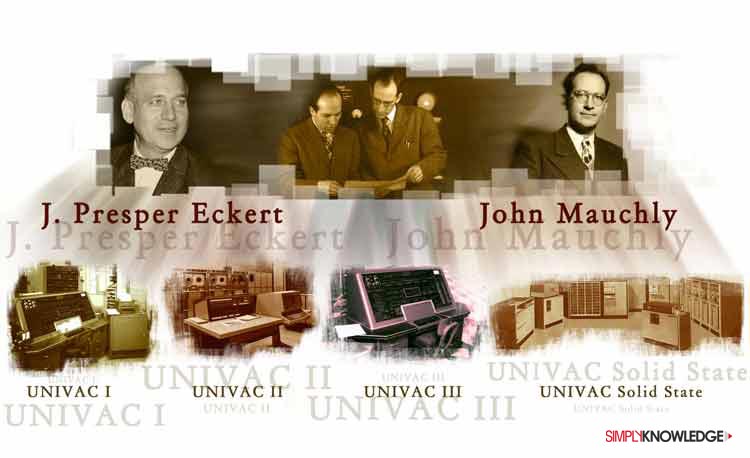

- ENIAC was conceived and designed by John Mauchly and J. Presper Eckert of the University of Pennsylvania.

- It weighed more than 30 short tons and roughly covered 1800 square feet, and consumed 150 kW of power. The computer could only be operated in a specially designed room with its own heavy duty air conditioning system.

- Input for this humongous machine was fed using an IBM card reader, and for output it had an IBM card punch.

- ENIAC had amazing mathematical power and general-purpose programmability that excited scientists and industrialists.

- It was later learnt that the subject matter of ENIAC was derived from the Atanasoff-Berry Computer.

- Around the same time, in 1944, the IBM Automatic Sequence Controlled Calculator (ASCC), called the Mark I, was installed at Harvard University.

- The electromechanical ASCC was devised by Howard H. Aiken, built by IBM and shipped to Harvard.

- If ENIAC was to serve the U.S. Army purpose, the Mark I would compute for the U.S. Navy Bureau of Ships.

But ENIAC Had a Few Shortcomings

- Like ENIAC, Mark I too was a huge computer that would occupy the entire room. In fact for another twenty years, computers were massive in size.

- One of the major shortcomings of ENIAC was reprogramming it. You had to rearrange large numbers of patch cords and switches. The scientists worked on this for days and rectified the situation.

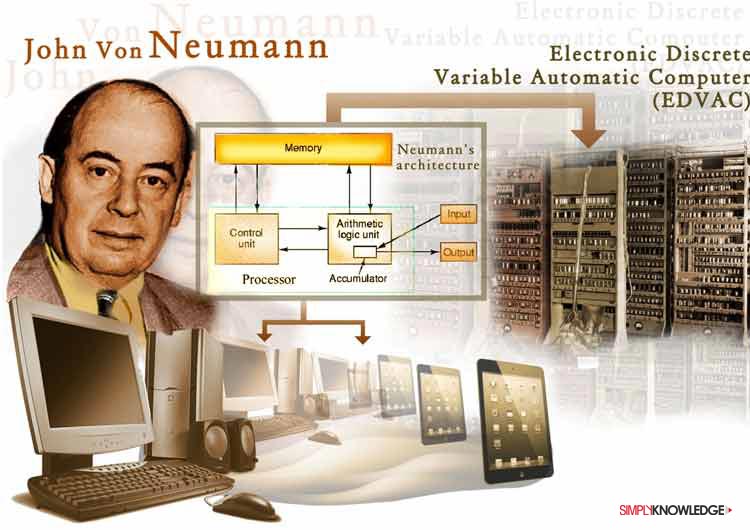

- For their next venture, the Electronic Discrete Variable Automatic Computer (EDVAC), in August 1944, Eckert and Mauchly teamed up with John von Neumann. The mathematician von Neumann pioneered the stored-program concept.

- With the stored-program concept, you can store instructions in the computer’s memory to enable it to perform a variety of tasks on your command.

- The EDVAC was one of the first electronic computers to have this feature.

- Besides, the EDVAC followed the von Neumann architecture, in which program instructions and data are stored in the same memory.

- Computers designed under the Harvard architecture, by contrast, had separate memories for storing programs and data.

- Like ENIAC, EDVAC was built for the U.S. Army’s Ballistic Research Laboratory.

- A number of projects took off that incorporated the von Neumann architecture, also called the stored-program architecture.

- A lesser-known fact is that von Neumann’s design, the von Neumann architecture, was first used in the making of the hydrogen bomb. Later, it went on to be the prototype for modern computers, right from desktops to iPads.
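The stored-program idea, with instructions and data sharing one memory, can be shown with a toy machine in Python. The four instructions used here (`LOAD`, `ADD`, `STORE`, `HALT`) and the function name `run` are invented for this sketch and do not correspond to the EDVAC’s actual instruction set.

```python
def run(memory: list) -> list:
    """Execute the program held in `memory`, starting at address 0.

    Instructions are tuples and data are plain integers, but both live
    in the same list, as in a stored-program (von Neumann) design.
    """
    accumulator = 0
    program_counter = 0
    while True:
        operation, *operands = memory[program_counter]
        program_counter += 1
        if operation == "LOAD":      # copy a memory cell into the accumulator
            accumulator = memory[operands[0]]
        elif operation == "ADD":     # add a memory cell to the accumulator
            accumulator += memory[operands[0]]
        elif operation == "STORE":   # write the accumulator back to memory
            memory[operands[0]] = accumulator
        elif operation == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {operation}")


if __name__ == "__main__":
    memory = [
        ("LOAD", 4),   # address 0
        ("ADD", 5),    # address 1
        ("STORE", 6),  # address 2
        ("HALT",),     # address 3
        2,             # address 4: first number
        3,             # address 5: second number
        0,             # address 6: result goes here
    ]
    run(memory)
    print(memory[6])  # 5
```

Giving the machine a new task means writing different tuples into the list, not rewiring anything, which is the contrast with ENIAC’s patch cords.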

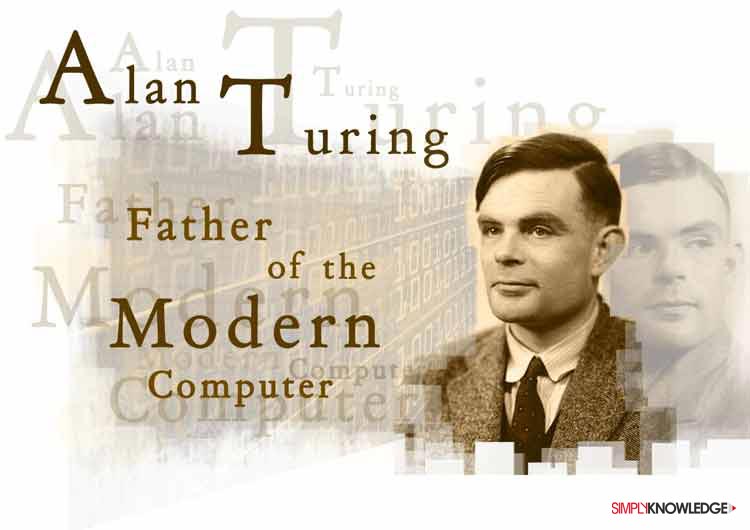

- Ideally, to perform a task, the computer opens an appropriate program stored in its memory. But with the early computers, reprogramming was time and energy consuming. It was in 1936 that Alan Turing, a British mathematician, cryptanalyst and computer scientist, conceived the basic principle of the modern computer: the stored program.

- The stored-program concept keeps in the computer’s memory a program of coded instructions that controls the machine’s operation and makes the manual process of reprogramming the computer redundant.

- John von Neumann studied Turing’s abstract universal computing machine and tried applying the stored-program concept to the ENIAC. And Bingo! It worked out well.

- Turing’s universal computing machine of 1936 was a phenomenal invention. The machine consisted of a limitless memory in which both data and instructions are stored. It also had a scanner that moved back and forth through the memory, symbol by symbol, reading what it found and writing further symbols.

- Turing’s contribution played a major role in modern computing, and that is why he is called the Father of the Modern Computer. He is also a pioneer of Artificial Intelligence and Artificial Life.

- In 1946, over a patent rights dispute, John Mauchly and J. Presper Eckert parted ways with the University of Pennsylvania’s Moore School of Electrical Engineering. The duo went on to start their own company, the Eckert-Mauchly Computer Corporation (EMCC), based in Philadelphia.

- The EMCC built some of the defining computers of the time, beginning with BINAC in 1949 and soon followed by the UNIVAC (Universal Automatic Computer) series.

- The ENIAC team was on a roll, as they regularly came up with newer computers. In March 1951 they delivered the UNIVAC I, often described as the first commercial mainframe computer, to the U.S. Census Bureau.

- In November 1952, the UNIVAC I became famous when it correctly predicted the outcome of the U.S. presidential election. Computers were now looked up to for gigantic calculations. As far as the common man was concerned, the machine could tell them who would be their President.

- Mainframe computers are used primarily by universities, corporations and government organizations for bulk data processing, such as census and consumer statistics.

- After the success of UNIVAC I, a number of UNIVAC versions followed.

- The earliest models of the UNIVAC 1100 series were vacuum tube computers.
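Turing’s 1936 machine, described earlier in this section as a limitless memory with a scanner that moves through it symbol by symbol, can also be sketched in a few lines. This is a minimal illustration under stated assumptions: the rule table `FLIP_RULES`, the state names and the blank symbol `_` are invented here, and the example machine only inverts a string of bits.

```python
from collections import defaultdict

def run_turing_machine(rules, tape_input, start="scan", halt="halt", blank="_"):
    """Run a one-tape machine; `rules` maps (state, symbol) to (write, move, next_state)."""
    # The tape is unbounded in both directions: unseen cells read as blank.
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    head, state = 0, start
    while state != halt:
        write, move, state = rules[(state, tape[head])]  # read the scanned symbol
        tape[head] = write                               # write a symbol in its place
        head += 1 if move == "R" else -1                 # move the scanner one cell
    cells = [tape[i] for i in sorted(tape)]
    return "".join(cells).strip(blank)


# Hypothetical rule table: flip every bit, then halt at the first blank cell.
FLIP_RULES = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", "_"): ("_", "R", "halt"),
}

flipped = run_turing_machine(FLIP_RULES, "1011")
print(flipped)  # prints 0100
```

The rule table plays the role of the program. Turing’s further insight was that such a table can itself be written onto the tape, so that one universal machine can imitate any other; the sketch above does not go that far.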

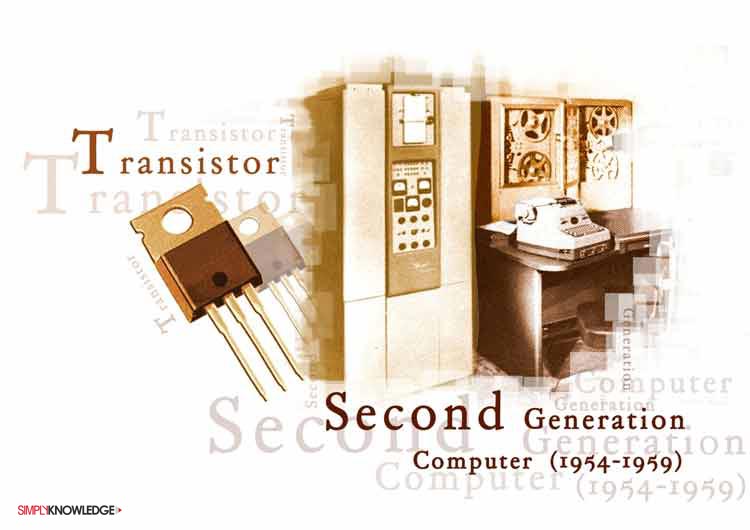

Second Generation Computer (1954-1959)

- Throughout the 1950s, vacuum tubes remained one of the most important components of computers. The computers built with vacuum tubes are called the first generation of electronic computers.

- However, from the late 1950s they were steadily replaced by transistor-based machines, which were smaller, faster, cheaper to produce, more reliable and required less power.

- Computers built with transistors instead of vacuum tubes are classed as second generation computers.

- The second generation was the shortest chapter in the evolution of computers. With the invention of integrated circuits, computers became faster still.

- Also, the second generation computers moved from cryptic binary machine language to symbolic, or assembly, languages.

- In addition, high-level programming languages such as the early versions of COBOL and FORTRAN were developed.

- These were also the first computers used in the atomic energy industry.

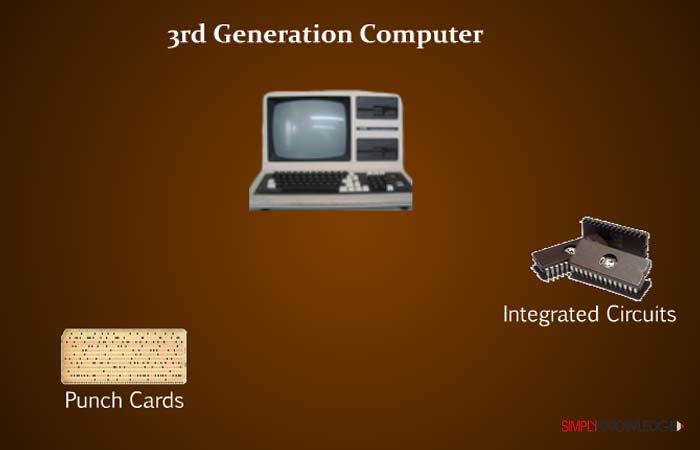

Third Generation (1959-1971)

- The second generation computers were transistor computers. One early machine used a total of 200 point-contact transistors and had reliability problems: its average error-free run time was only 1.5 hours.

- It was only in 1958 that Jack Kilby, an electrical engineer working for Texas Instruments, developed a body of semiconductor material in which all the components of the electronic circuit were completely integrated.

- It is called the Integrated Circuit. It contained a number of transistors and other electronic components.

- Compared to the 1st and 2nd generation computers, the 3rd generation computers were smaller in size, because they used integrated circuits built from semiconductors.

- These computers also came with an operating system, a system that allows a computer to run different programs at the same time.

- These computers were a remarkable feat, as they had moved from transistors to integrated circuits and from punch cards to Operating Systems.

- Integrated circuits revolutionized the entire world of electronics. They replaced vacuum tubes and discrete transistors. Over time, integration density grew from Small-Scale Integration (SSI) to Large-Scale Integration (LSI), which made the microprocessor possible, and later to Very-Large-Scale Integration (VLSI) and Ultra-Large-Scale Integration (ULSI).

SABRE – Prototype of Buying and Selling Online

- With the third generation, the application of computers was soon touching different domains.

- By 1964, IBM had completed one of the first computerized airline reservation systems, SABRE (Semi-Automatic Business Research Environment), for American Airlines.

- SABRE was among the first commercial systems to connect remote terminals to central computers over a network.

- Since the terminals were connected, people could enter information from any terminal on the network and make their reservations.

- This system went on to become the prototype of buying and selling online and the entire concept of e-commerce.

Fourth Generation (1971-Present)

- Microprocessors are integrated circuits that contain thousands or even millions of transistors.

- The first commercially available microprocessor was the Intel 4004, a 4-bit central processing unit released by Intel Corporation in 1971.

- The chip placed all the components of the central processing unit on a single chip, while the memory and the input/output controls sat on companion chips.

- It became the first complete CPU on one chip.

- Computers could now be linked together to form networks, which eventually led to the internet.

- This generation also saw the development of the Graphical User Interface (GUI), the mouse as a navigational tool, and handheld devices.

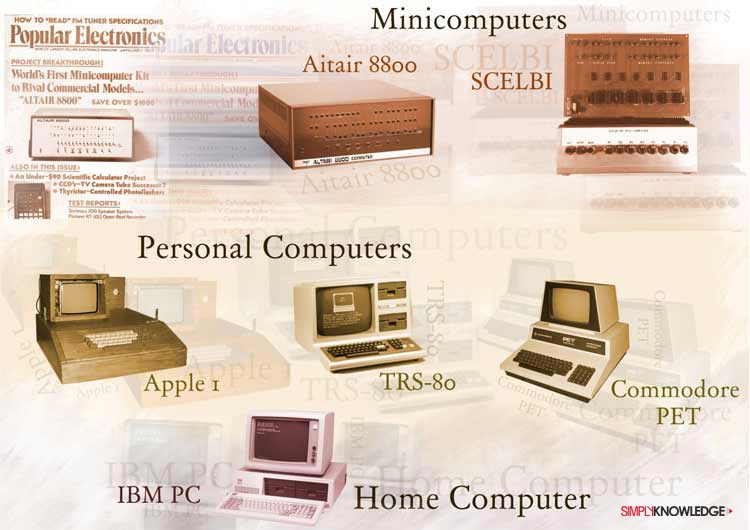

Altair 8800 – First Minicomputer

- In case you are wondering about small computers for individuals, well… until January 1975 hardly anyone had heard of them.

- The cover of the January 1975 issue of the magazine Popular Electronics flashed the news of the “World’s First Minicomputer Kit”, the Altair 8800. Machines of this class are now called microcomputers; the term minicomputer properly belongs to larger machines of the 1960s.

- In appearance, the Altair 8800 was a rectangular box with a row of toggle switches on its front panel and a number of red lights.

- But programming the Altair was an extremely tedious process. The user had to toggle the switches to enter code, and in response the lights on the machine would blink.

- The Altair was not the only such machine. About a year before the Altair, SCELBI sold hardware called the SCELBI-8H.

- It came with random access memory and was available in two options: fully assembled, or as a kit consisting of circuit boards, power supply, etc.

- Users would either buy the fully assembled machine, or the kit, and have it assembled later.

- But what was missing from these computers was the X factor. They did not have a monitor, a keyboard, or an interactive format.

Personal Computer

- By the late 1970s, Apple with the Apple II, Tandy Corporation with the TRS-80 and Commodore with the PET (Personal Electronic Transactor) had come up with computers that had a monitor, a keyboard, and an interactive format. These computers hit the market and made the computer personal.

- But what put computers everywhere was the IBM PC, introduced in August 1981.

- In the early 1980s, Apple came out with computers that offered a graphical interface navigated with a device called the mouse. The developments in personal computers were happening at lightning speed. With time computers began to get smaller and lighter, oh yes, they became portable. Well, they are soon going to become wearable.

Computers today are in their fifth generation. Today’s computing devices increasingly draw on artificial intelligence and are making progress in applications such as voice recognition, AFIS (Automated Fingerprint Identification System) and other biometric technologies. Quantum computation, molecular computing and nanotechnology are some of the promising technologies that are going to shape the future.

- Life in the last six decades has moved faster than the common man could ever have imagined. And all of it happened with the invention of the computer.

- Computers played an important role in the launch of the first artificial Earth satellite, Sputnik 1, in 1957. But little did people know that IBM was by then already working on the computer reservation system SABRE.

- The SABRE system went on to be the prototype for applications across the service industry: hospitality, pharmaceuticals, banking, hospitals, education and more.

- IBM’s chess-playing computer Deep Blue was another feat of computer technology that came close to defying human intelligence. It lost its first match to the world chess champion Garry Kasparov 4-2 in 1996, but won the six-game rematch in 1997 with two wins.

- Computer technology became more powerful with the introduction of microprocessors and the internet.

- Microprocessors have found application in practically everything, from toasters to cars and refrigerators to electric ovens; in fact, in nearly every domestic appliance and in business equipment like printers, intercom systems, fax machines, photocopiers and cell phones.

- The internet has redefined the lifestyles of the 21st century. Systems like GPS (Global Positioning System) help us with routes and maps while travelling. Emails, video chats and handheld computers have empowered this generation with information that is just a click away. Wearable computers are in the making, and human microchip implants are in the pipeline. Only time will tell where the next generation is heading; till then one may move between the online and offline worlds.

A long time ago, as far back as the fourth century BC, Greek philosophers such as Aristotle believed the earth was the center of the universe, with the sun, stars and planets moving around it. This description was represented in the Geocentric Model.

Centuries passed, and in the 16th century Nicolaus Copernicus formulated a comprehensive Heliocentric Model, which says the sun is the center of the solar system.

But today, if you take one cursory look around, you will find how much our lives are governed by the computer. So, it wouldn’t be wrong to suggest a Computercentric Model, where the computer lies at the centre of our lives.